Want to grow your business and position your brand for success? One of the most effective ways to do so is to hire a Chief Marketing Officer (CMO), an experienced, results-driven executive responsible for all of your marketing efforts.

But with an average annual salary of over $330,000, a full-time CMO is out of reach for many small to mid-sized businesses. Facing tight budgets and strained resources, these companies need to embrace the idea of a fractional CMO and outsource their marketing leadership if they want to grow.

That’s what I want to discuss today: what a fractional CMO is and the services they provide for small to medium-sized businesses.

What is a Fractional CMO?

Let’s start with something a bit simpler: what is a CMO?

CMO stands for Chief Marketing Officer, the executive who defines a business's marketing strategy and oversees its implementation. The role originated in large enterprises, but it has grown rapidly over the last couple of years.

A fractional CMO is a part-time version of this position. Just like fractional accountants, fractional Chief Financial Officers, or fractional Chief Technology Officers, a fractional Chief Marketing Officer is an executive who leads a company's marketing strategy and execution on a part-time basis. In our eBook, The Fractional CMO Agency Model, you can learn more about how this works.

What is the difference between a marketing agency, a marketing coach, a consultant, and a Fractional CMO?

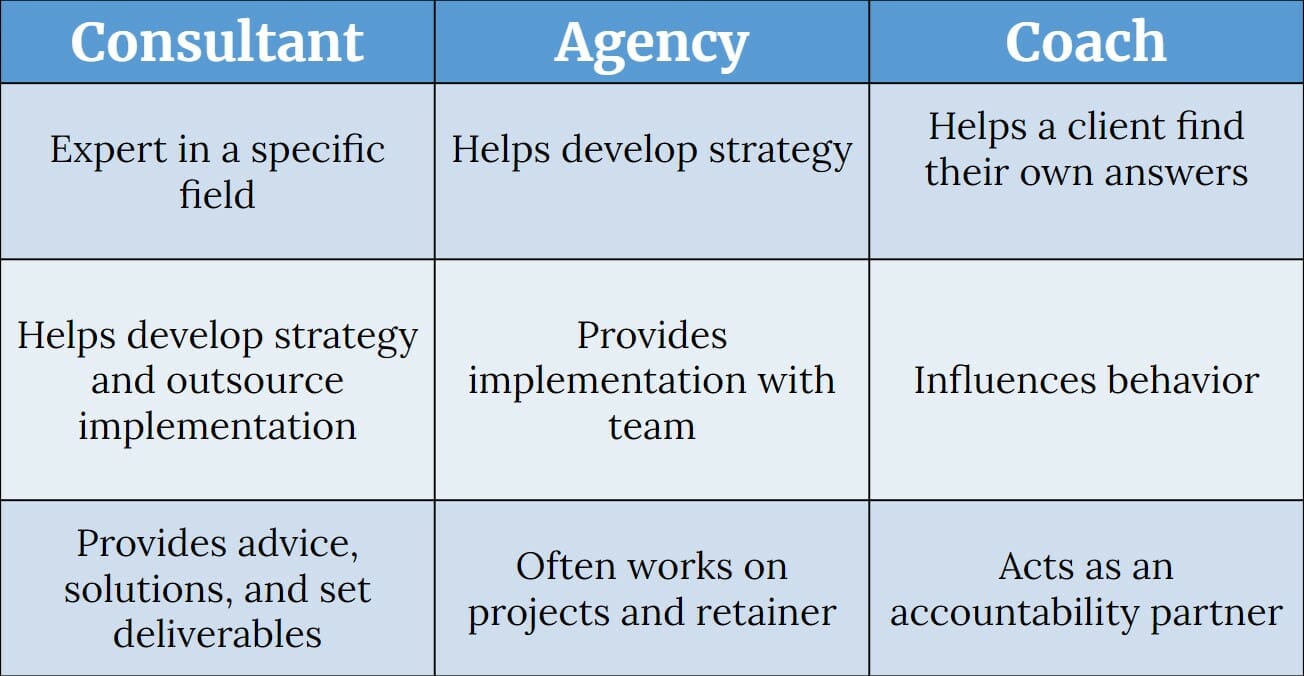

The number of options businesses have for getting marketing help can be overwhelming. But if we focus on strategy and implementation, the main choices you’ll come across are hiring a marketing agency, a marketing coach, a marketing consultant, or a fractional CMO.

Marketing Agency

A marketing agency is a company that serves various clients in one or more areas of marketing. Agencies sometimes get involved in strategy, but they often focus on the implementation side of the business. They are useful for companies that have a strategy already in place and need some extra help with execution.

Marketing Coach

A marketing coach normally supports business owners or marketing teams by providing training material, acting as an accountability partner, and recommending strategic actions, but is not involved in implementation at all.

Marketing Consultant

Marketing consultants are experts in a specific field who help companies by giving advice and developing their marketing strategies. However, they typically outsource implementation to freelancers or an external marketing team.

Fractional CMOs are particularly relevant today because they combine the strategic approach of a consultant with the execution capability of an agency, and they act as a marketing coach for your team while being accountable for delivering measurable results for your business.

Fractional CMO Responsibilities

A typical fractional CMO has a mix of responsibilities, all designed to meet the needs of the companies they serve.

These can include:

Defining Goals and developing a marketing strategy

A fractional CMO should be able to develop and communicate clear goals for the marketing team and align those goals across the organization.

Fractional CMOs are also responsible for developing a brand's marketing strategy (budget, vision, team makeup, customer journey, systems, and tactics). They must narrow the marketing focus to the most important channels and formulate a winning, repeatable plan for the business.

Effective fractional CMOs can also shape the overall business strategy from a marketing standpoint. For example, they could help define the ideal clients, propose the best markets to target, and choose the marketing channels most likely to bring in more business.

This is the most important part of the role because it helps the company stand out from its competition in the minds of its ideal customers.

To develop a winning marketing strategy, the fractional CMO should start with these four concepts:

- Identifying the brand's ideal client

- Defining the problem the brand solves and promising to solve it

- Making content the voice of the strategy and establishing the brand as an authority online

- Creating a complete buyer journey

Managing the marketing department

Fractional CMOs may have their own team to implement the strategy or be responsible for managing an internal department. Hiring, managing, and sometimes promoting employees are common responsibilities of a fractional CMO.

The job of a fractional CMO is one of the most challenging. The average CMO stays in the role for just 40 months, so they have to be prepared to make an impact fast.

That’s why most fractional CMOs have their own implementation team. Based on our experience training successful marketing managers and consultants, having a network of professionals who can help you execute your plan is essential to achieving consistent growth as an agency.

The Duct Tape Marketing Agency Workshop certifies fractional CMOs to license the complete Duct Tape Marketing System for their agency. Completing the workshop includes an invitation to a community of like-minded business professionals who come together for strategic partnerships, continued training, and events.

Establishing Marketing Metrics and KPIs

A fractional CMO is responsible for establishing clear marketing goals, metrics, milestones, and key performance indicators (KPIs). These are used to measure progress and determine whether the marketing team is meeting the goals of its campaigns.

Common metrics for a marketing department include the number of qualified leads in a given period, sign-ups or appointments generated, lead conversion rate, lead-to-customer conversion rate, cost per lead, and monthly recurring revenue (MRR).

Tracking these metrics helps fractional CMOs see where campaigns are falling short, so they can adjust course and deliver consistent, long-term results.
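Several of these metrics are simple ratios. As a rough illustration, here is a minimal Python sketch that computes a few of them from one month of hypothetical funnel data. The `MonthlyFunnel` fields, the sample numbers, and the exact definitions used (cost per lead as spend divided by all leads, lead conversion rate as qualified leads divided by all leads, lead-to-customer rate as new customers divided by qualified leads) are assumptions for illustration; teams define these KPIs in different ways.

```python
from dataclasses import dataclass


@dataclass
class MonthlyFunnel:
    """Hypothetical one-month snapshot of a marketing funnel (illustrative fields)."""
    marketing_spend: float          # total campaign spend for the period
    total_leads: int                # every lead captured (form fills, sign-ups, etc.)
    qualified_leads: int            # leads meeting the team's qualification criteria
    new_customers: int              # qualified leads that became paying customers
    subscription_fees: list[float]  # monthly fee of each active recurring customer


def safe_ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def funnel_kpis(f: MonthlyFunnel) -> dict[str, float]:
    """Compute a few common KPIs using the assumed definitions above."""
    return {
        "cost_per_lead": safe_ratio(f.marketing_spend, f.total_leads),
        "lead_conversion_rate": safe_ratio(f.qualified_leads, f.total_leads),
        "lead_to_customer_rate": safe_ratio(f.new_customers, f.qualified_leads),
        "mrr": sum(f.subscription_fees),  # monthly recurring revenue
    }


if __name__ == "__main__":
    # Made-up sample month, not real data.
    month = MonthlyFunnel(
        marketing_spend=5000.0,
        total_leads=250,
        qualified_leads=100,
        new_customers=12,
        subscription_fees=[300.0] * 40,
    )
    for name, value in funnel_kpis(month).items():
        print(f"{name}: {value:,.2f}")
```

With the sample numbers, this reports a cost per lead of 20.00, a lead conversion rate of 0.40, a lead-to-customer rate of 0.12, and MRR of 12,000.00. Returning 0.0 for an empty denominator keeps a month with no leads from crashing the report; you might prefer to flag such months explicitly instead.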

Increasing sales and revenue

A fractional CMO's mission is to increase sales by developing a comprehensive marketing plan that helps the organization gain a competitive advantage and generate more revenue. They are also responsible for overseeing how the marketing budget is spent and managing the quality of the marketing campaigns.

In today’s digital world, fractional CMOs not only have to be proficient at strategy and execution, but they also need the communication and leadership skills required to motivate and inspire the marketing team and other cross-functional teams.

Broad experience in areas like product marketing, content, brand and design, pay-per-click (PPC) advertising, paid social, and project management is highly relevant to succeeding as a fractional CMO, as is familiarity with commonly used marketing tools like Google Analytics, HubSpot, Salesforce, Marketo, and Mailchimp.

Act as a consultant for the marketing team

Successful chief marketing officers, both full-time and fractional, often have a strong sense of purpose and can instill a set of values within the organizations they serve. They are not just order takers; they are strategists, consultants, and coaches.

Fractional CMOs may also create programs that support employees in different ways. For example, they could create and run mentorship programs or training for their own team or their clients' teams.

Fractional CMOs might also implement the marketing strategy with their own team of experts. This can be far more effective than spreading these tasks across your company or building and staffing a whole new department. As you refine your strategy, you can even productize your services to scale.

If you enjoyed this post, check out our Ultimate Guide to Scaling a Fractional CMO business and Guide to Growing Your Agency or Consulting Business.